AI Governance, Explainability & Compliance Services

We help EU-based finance, insurance, healthcare and B2B SaaS teams turn opaque AI into transparent, auditable and human-centric systems – aligned with the EU AI Act.

From black boxes to transparent intelligence.

Lumethica.ai

We help European teams move from AI hype and isolated pilots to governed, documented and explainable AI. We design explainability so that you can defend AI-driven decisions in front of boards, auditors and regulators.

AI Governance Framework

Most organizations already experiment with AI – but lack explainability, documentation and a clear view of risks. That becomes a real problem the moment auditors, regulators or customers start asking: “Why did the model make this decision?”

AI Act Readiness

Lumethica helps you design AI systems that are traceable, documented and explainable by design – so your teams can defend model decisions, pass audits and build real trust in AI.

AI Governance & AI Act Readiness

Assess where you stand today – and what needs to change to meet EU AI Act and internal governance requirements. For EU organizations in finance, insurance, healthcare, energy and public services, AI governance is no longer optional.

We review your AI use cases, data flows and existing documentation against key AI Act obligations. Together with your legal, product and data teams, we map roles (provider / deployer), risk categories and gaps in current processes.

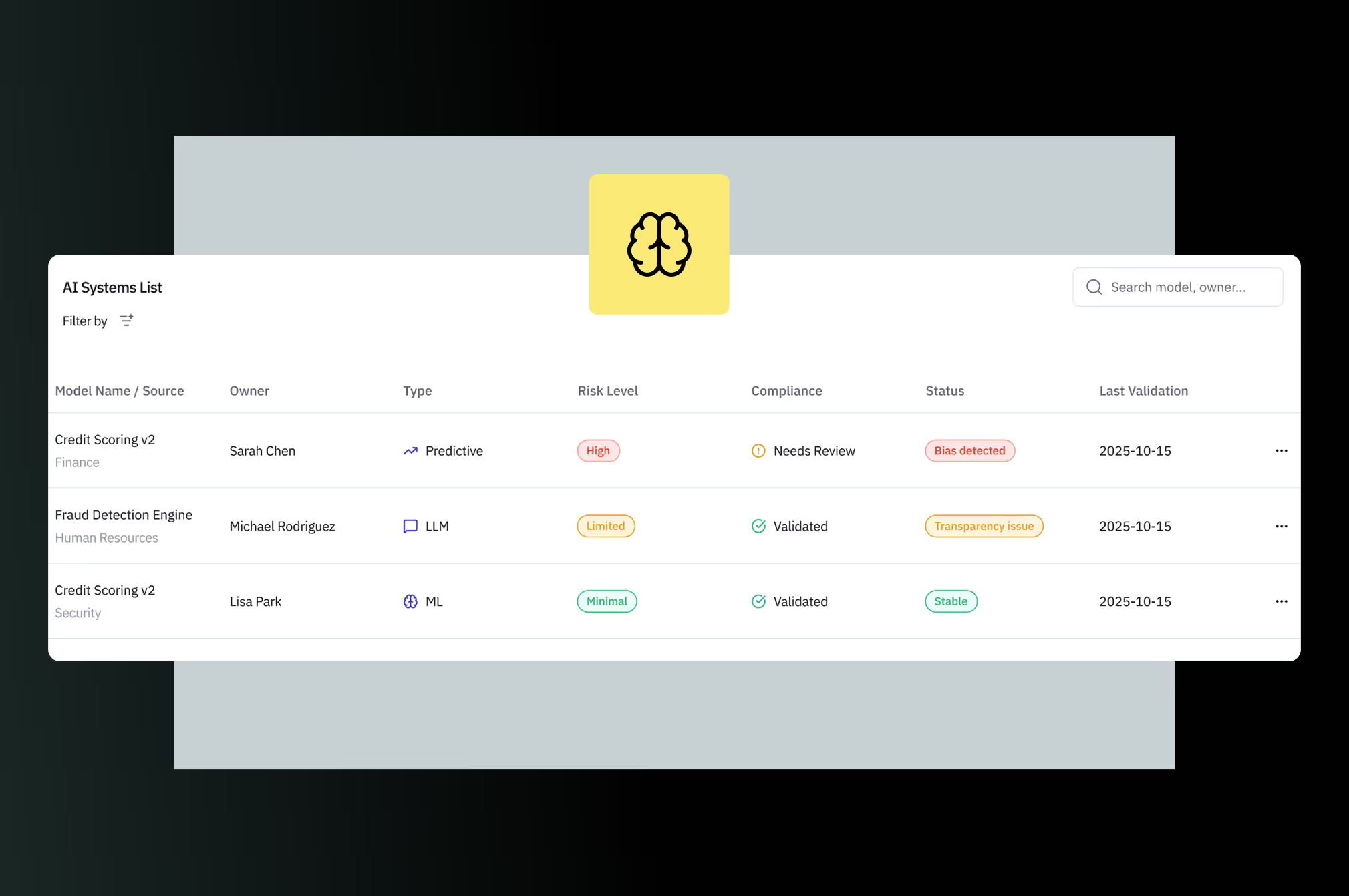

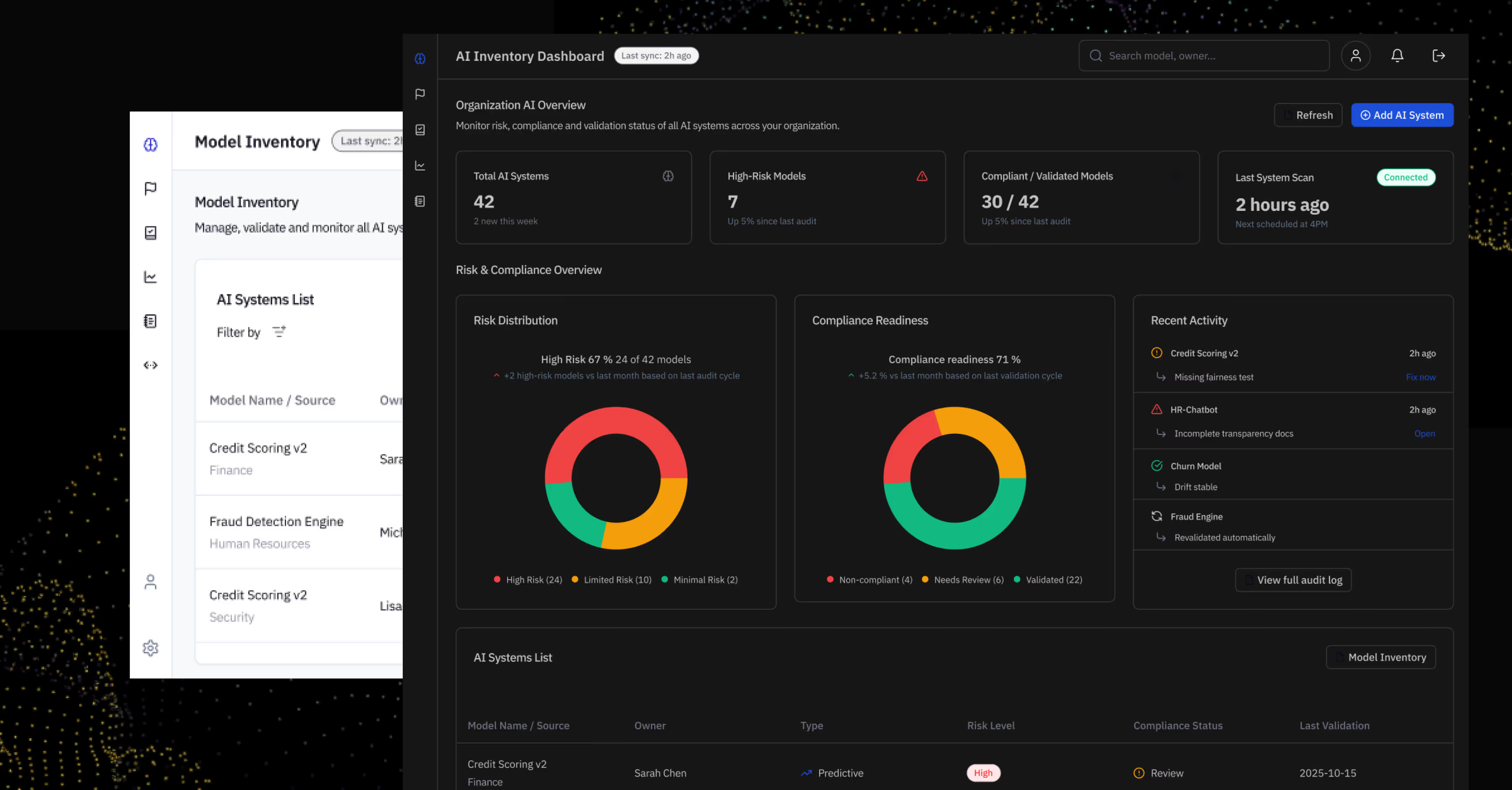

- AI Inventory: mapped list of models, use cases and risk levels.

- Readiness Scorecard: where you already comply, where you’re exposed.

- Governance Blueprint: recommended roles, processes and documentation to implement in the next 3–6 months.

- Executive-friendly summary that can be shared with management, auditors or investors.

- Playbooks for product, risk and data teams on how to work with AI responsibly.

- All findings are structured in a way that can be reused in your internal AI policies and AI Act technical documentation.
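To make the AI Inventory more concrete, here is a minimal sketch of how one inventory entry could be recorded. Everything in it is an assumption for illustration: the class and field names are hypothetical, the four risk tiers follow the commonly used reading of the AI Act, and the gap rule is a simplified example rather than a legal test.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    # Roles mentioned in the text above
    PROVIDER = "provider"
    DEPLOYER = "deployer"


class RiskTier(Enum):
    # Assumed tier names, based on the commonly used four-level reading of the AI Act
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class InventoryEntry:
    # Hypothetical fields; a real inventory would carry more detail
    model_name: str
    use_case: str
    role: Role
    risk_tier: RiskTier
    has_technical_documentation: bool = False


def documentation_gaps(entries):
    """Return high-risk entries that have no technical documentation yet.

    Illustrative rule only: it flags a single kind of gap.
    """
    return [
        entry
        for entry in entries
        if entry.risk_tier is RiskTier.HIGH and not entry.has_technical_documentation
    ]


# Made-up example entries
inventory = [
    InventoryEntry("credit-scoring-v2", "loan approval", Role.PROVIDER, RiskTier.HIGH),
    InventoryEntry("support-chatbot", "customer FAQ", Role.DEPLOYER, RiskTier.LIMITED),
]
gaps = documentation_gaps(inventory)
```

A structure like this is what lets a scorecard be derived from the inventory instead of being maintained by hand.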

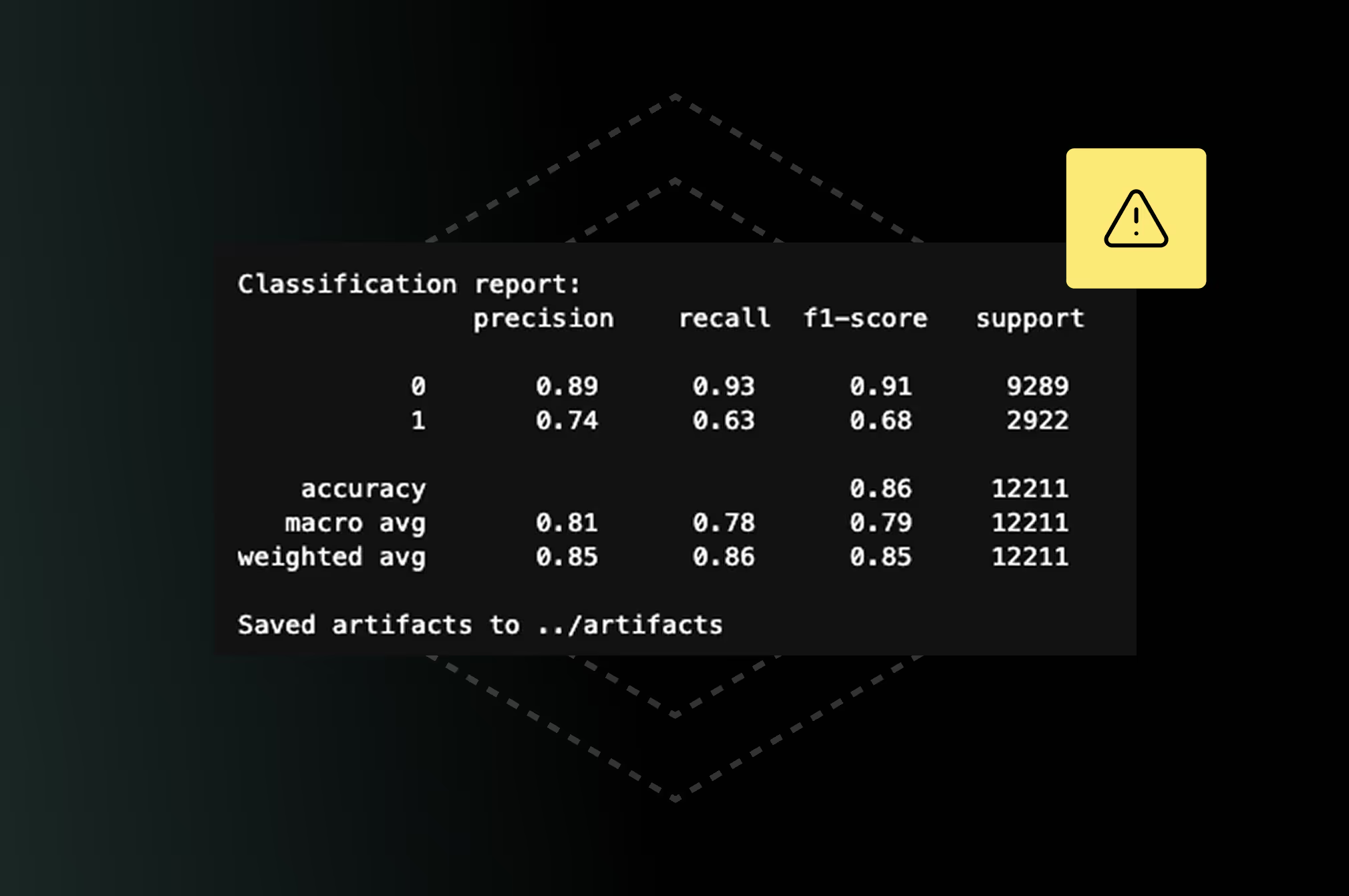

Model Risk & Explainability Snapshot

A focused audit of one priority model – with a visual report that your team can actually understand.

We take one of your key models (for example credit scoring, fraud detection or triage) and run a compact risk & explainability assessment using open-source tools (Fairlearn, Evidently, SHAP, LIME) and your existing predictions.

Why it matters for CFOs and CROs

This snapshot gives you evidence – not opinions. You see where the model behaves reliably, where it drifts, and where bias might create legal or reputational risk. Instead of hoping “our AI is fine”, you have a concrete basis for decisions: retrain, monitor or limit usage.

- Visual risk & explainability dashboard (bias, drift, performance, stability).

- Clear findings: where the model behaves as expected, where there are risks or blind spots.

- Concrete recommendations: what to monitor, what to document, what to retrain.

- PDF report that can serve as part of your AI documentation or internal audit trail.
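As a rough illustration of what bias and drift checks on existing predictions can look like, here is a minimal sketch in plain Python. It is not the Fairlearn or Evidently API and not necessarily how a snapshot is produced. The function names, the equal-width binning and the sample data are all assumptions made for this example.

```python
import math
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest positive-prediction rate across groups.

    predictions: iterable of 0/1 model decisions
    groups: iterable of group labels, aligned with predictions
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of model scores.

    Uses equal-width bins over the reference range (an assumption of this
    sketch); eps keeps empty bins from producing log(0).
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the reference range
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Made-up data: approvals (1) and rejections (0) for two groups
preds = [1, 1, 0, 0, 1, 0, 0, 0]
group_labels = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(preds, group_labels)
```

A large gap between groups, or a PSI that grows between the training period and recent data, would be the kind of evidence that turns into a finding in the report. What counts as "large" is a judgment call for your risk team.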

AI Explanation Design Sprint

Design the human-AI interface – dashboards, alerts and reports that make model decisions understandable.

In a design sprint, we work with your product, data and risk teams to translate raw model outputs into human-friendly interfaces. We combine UX for complex data with XAI methods to define what different stakeholders need to see – and how.

- Mapped user journeys and explanation needs (analyst, manager, regulator, end-user).

- Clickable Figma prototype of an Explainable AI dashboard or report.

- Design guidelines for integrating explanations into your existing product.

- Backlog of implementation tasks for your product / data team.

You can use the resulting prototype directly in product roadmaps, stakeholder demos and investor conversations to show that your AI is explainable by design.

Education & Awareness

Build AI literacy inside your organization – from leadership to product and data teams.

Lumethica Academy delivers workshops and learning paths on AI governance, explainability and the EU AI Act. We bring together Jupyter-based demos, real model examples and clear language, so teams understand not only what the law says, but how it impacts their daily work.

Our programs are designed for mixed groups – leadership, product, risk and data teams – so everyone shares the same mental model of AI risk and regulation.

- Tailored workshops: AI Act in practice, Explainability for Business, Bias & Drift 101.

- Practical notebooks and materials that your data team can reuse.

- Management-level briefing decks for boards, investors and auditors.

- Option to extend into an internal “AI Act Ready” program for your organization.

Why Lumethica

Let’s build AI systems that are transparent, accountable and aligned with human values. Whether you need governance strategy, an explainable interface or a model risk snapshot – Lumethica brings light to every layer of your intelligence stack.

Design-driven AI governance

Years of experience designing complex data products (Synerise, Salesmanago, Meniga, CloudFerro) – now focused on AI risk, explainability and compliance.

From metrics to human-readable stories

We translate bias, drift and performance metrics into visuals and narratives that non-technical stakeholders can understand and act on.

Built on open-source XAI tools

We work with trusted, transparent libraries (Fairlearn, SHAP, LIME, Evidently) – so you are not locked into another black-box platform.

Independent, niche and focused

We are a specialized studio – not a generic consultancy. You work directly with the designer responsible for your interface and your governance story. No new platform to integrate, no vendor lock-in – we work with your existing stack and open-source tools.

We connect data, design and regulation – translating AI into a language understood by people, business and law.

Book a Discovery Call →

Illuminate your AI.

Let’s build AI systems that are transparent, accountable and aligned with human values. Whether you need a governance strategy, a compliance audit or explainability design – Lumethica brings light to every layer of intelligence.