Explainable Clinical AI That Doctors Can Trust

We design clinical XAI interfaces that turn your AI from a black box into a traceable, auditable decision-support tool – aligned with AI Act and MDR expectations.

Why clinical XAI UX now?

AI is already inside your diagnostic workflows, triage systems and risk scores.

But if clinicians, ethics committees and regulators cannot understand why a model suggests a given decision, adoption stalls and regulatory risk increases. Lumethica’s Clinical XAI UX Design Sprint helps medtech and healthtech teams build transparent, human-centred interfaces for high-risk clinical AI – without changing your underlying model.

High Risk AI

Clinical AI systems (diagnostics, triage, risk scoring) will increasingly be classified as high-risk under the EU AI Act, with obligations that overlap with MDR requirements.

New AI Regulations

Sectoral law (e.g. medical device regulations) does not fully cover AI-specific risks such as opacity, data drift or bias – regulators explicitly expect complementary AI governance and documentation.

Understandable explanations

Doctors and nurses are expected to remain in control, which requires understandable explanations, uncertainty indicators and clear override options in the UI – not just raw model scores.

- Doctors distrust AI suggestions or actively ignore them.

- Explanations are buried in technical documentation, not visible in clinicians' daily tools.


- Dashboards expose raw probabilities and feature importance, but not clinical narratives.

- Workflows do not reflect real decision paths, escalation rules or human oversight.

- The team lacks a coherent way to show ethics committees and regulators how the system supports human-in-the-loop decision-making and risk management.

- There is no reusable “explainability map” that connects model behaviour to clinical use cases.

Our solution

Clinical XAI UX Design Sprint

The Clinical XAI UX Design Sprint is an intensive engagement in which we co-design a complete explainability layer for one key clinical AI use case.

We focus on three perspectives at once:

- Clinicians – clear explanations, uncertainty, override patterns.

- Product & data teams – operational visibility into model behaviour.

- Regulators & ethics committees – traceability, human oversight, auditability.

“We had a strong imaging model, but zero traction with clinicians. Within a few weeks, Lumethica gave us a clinical dashboard that finally made sense to doctors and our MDR advisors. For the first time, we could walk into a hospital meeting and show how AI supports – not replaces – clinical judgement.”

Clinical XAI UX in 4 Steps

A structured, collaborative sprint that connects your existing clinical model with an explainable interface, clear human-oversight paths and evidence aligned with AI Act and MDR expectations.

Discovery & Risk Lens

- Stakeholder interviews (product, clinical, data science, regulatory).

- Alignment on clinical use case, risk profile and AI Act / MDR implications.

Clinical Journey & Explanation Map

- Mapping current clinical workflow and decision points.

- Designing the Clinical Model Explanation Map and oversight paths.

Interface Design & Prototyping

- Designing XAI UI patterns for global/local explanations, uncertainty and override.

- Building an interactive Figma prototype of the clinical and supervisory panels.

Review, Alignment & Handover

- Joint review with clinical and regulatory stakeholders.

- Delivery of prototype, pattern library and regulatory micro-brief.

Clinical Model Explanation Map

- A visual map of typical decision scenarios (e.g. CT triage, ICU risk scoring).

- How the model contributes, where clinicians remain in control, and what is logged.

Clinical XAI UI Pattern Library

- A set of reusable UI patterns: global & local explanations, uncertainty bands, confidence labels, counterfactuals, override flows.

- Designed for doctors, nurses and clinical coordinators – not just technical users.
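
To make two of these patterns concrete, here is a minimal Python sketch of the data that a confidence label and an override flow might rest on. It is an illustration under stated assumptions, not Lumethica's implementation: the names `RiskEstimate`, `confidence_label` and `OverrideRecord`, and the band-width thresholds, are hypothetical and not clinically validated.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class RiskEstimate:
    """Model output plus an uncertainty band; all values lie in [0, 1]."""
    probability: float
    lower: float
    upper: float


def confidence_label(estimate: RiskEstimate,
                     narrow: float = 0.10,
                     wide: float = 0.25) -> str:
    """Map the width of the uncertainty band to a plain-language label.

    The thresholds are illustrative placeholders (an assumption of this
    sketch), not validated clinical cut-offs.
    """
    width = estimate.upper - estimate.lower
    if width <= narrow:
        return "High confidence"
    if width <= wide:
        return "Moderate confidence"
    return "Low confidence, review recommended"


@dataclass(frozen=True)
class OverrideRecord:
    """Audit-trail entry written when a clinician overrides a suggestion."""
    case_id: str
    clinician_id: str
    model_suggestion: str
    clinician_decision: str
    reason: str
    # UTC timestamp captured when the record is created.
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


# Hypothetical usage with made-up values.
estimate = RiskEstimate(probability=0.72, lower=0.55, upper=0.85)
print(confidence_label(estimate))

override = OverrideRecord(
    case_id="case-001",
    clinician_id="clin-42",
    model_suggestion="escalate",
    clinician_decision="monitor",
    reason="Recent imaging already reviewed",
)
print(override)
```

Deriving the label from the band width rather than the raw probability keeps the UI from presenting a confident-looking number when the underlying estimate is wide, and the immutable override record gives the supervisory panel something concrete to audit.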

Clickable Figma Prototype

- A high-fidelity prototype of the clinical panel and optionally a supervisory panel (for QA, quality & safety, ethics).

- Ready to hand over to your in-house product and engineering teams.

Regulatory Micro-Brief (AI Act + MDR)

- A concise note mapping the designed interface to key expectations around transparency, human oversight and audit trails.

Example use cases

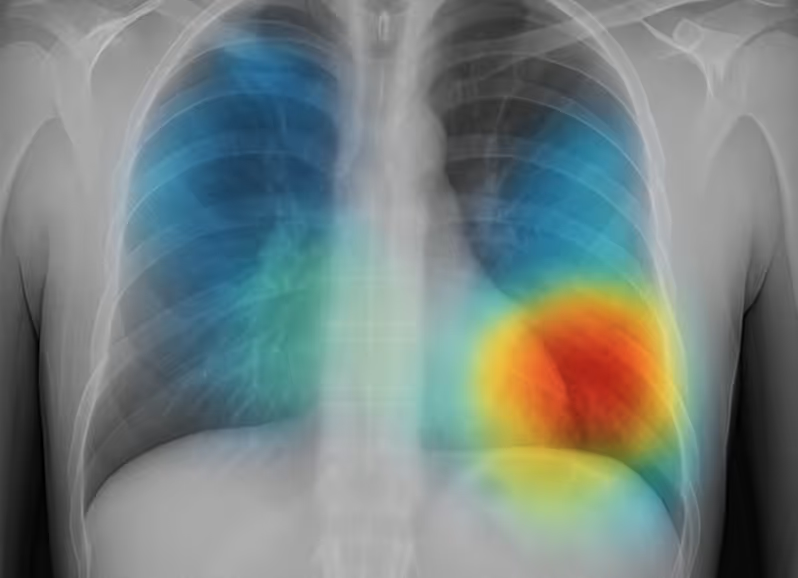

Explainable Imaging & Triage

Chest X-ray or CT triage model with explainable heatmaps and case-level narratives.

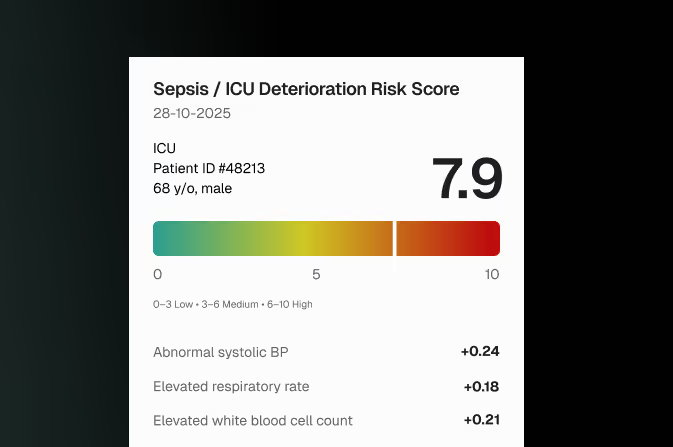

Transparent ICU Risk Scoring

Sepsis or ICU deterioration risk scores with transparent factor contributions and clinician override logs.
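
As a rough illustration of "transparent factor contributions", the Python sketch below turns per-factor contribution values into short, readable statements. It assumes the contributions have already been computed by some attribution method; the function name `summarize_contributions`, the factor names and the numbers are all hypothetical.

```python
def summarize_contributions(contributions: dict[str, float],
                            top_n: int = 3) -> list[str]:
    """Return plain-language lines for the strongest non-zero factors.

    `contributions` maps a factor name to its signed contribution to the
    risk score (positive raises the score, negative lowers it).
    """
    nonzero = [(name, value) for name, value in contributions.items() if value != 0]
    # Rank by absolute size so strong protective factors are not hidden.
    ranked = sorted(nonzero, key=lambda item: abs(item[1]), reverse=True)
    lines = []
    for name, value in ranked[:top_n]:
        direction = "raises" if value > 0 else "lowers"
        lines.append(f"{name} {direction} the risk score ({value:+.2f})")
    return lines


# Made-up example values, for illustration only.
example = {"lactate": 0.21, "heart rate": 0.08, "age": -0.03, "wbc": 0.01}
for line in summarize_contributions(example):
    print(line)
```

A real interface would phrase these lines in clinical language agreed with the care team; the point here is only that the narrative is derived from the same values the dashboard already holds.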

Oncology XAI Decision Support

Oncology decision-support systems that explain why certain cases are flagged as high-risk and how evidence was weighted.

Illuminate your AI.

Let’s build AI systems that are transparent, accountable, and aligned with human values. Whether you need a governance strategy, a compliance audit, or explainability design, Lumethica brings light to every layer of intelligence.