Clinical AI after the EU AI Act

Clinical AI after the EU AI Act: why explainability moved from “nice to have” to a regulatory requirement

If you are a C-level executive at a medtech company, a Head of Digital at a hospital, or a clinical AI product owner, you are probably facing a paradox:

- your organisation is investing heavily in AI for diagnostics and triage,

- yet clinicians still talk about “black boxes”,

- and legal/compliance keep asking how this fits the EU AI Act.

You are not alone.

The European Commission notes that AI-based software for medical purposes will be treated as high-risk AI and must comply with strict requirements on risk mitigation, high-quality data, human oversight and transparency (Public Health; Artificial Intelligence Act EU). High-risk systems must be designed so that users can understand them and receive clear instructions on how to interpret outputs and limitations (Artificial Intelligence Act EU).

At the same time, the AI in healthcare market is exploding: estimates suggest global revenues will exceed USD 110 billion by 2030, with Europe alone projected to grow from around USD 7.9 billion in 2024 to over USD 140 billion by 2033 (MarketsandMarkets). A 2024 survey indicates that by the end of this year more than 60% of healthcare organisations in the EU plan to use AI for disease diagnosis (aiprm.com).

In short: investment and deployment are accelerating, while expectations on explainability and governance are tightening.

The gap: powerful models, weak explainability

Recent reviews of explainable AI in healthcare show a consistent pattern: explanations can improve clinician trust and diagnostic confidence, but they often increase cognitive load and are misaligned with how clinicians actually reason (arXiv; ScienceDirect). Another study stresses that a lack of transparency in AI systems raises legal and ethical challenges and directly affects reliance on AI for patient care (SpringerLink).

Most teams I speak to recognise at least one of these issues:

- Clinicians see AI as a black box. Model performance looks good in internal benchmarks, but doctors do not understand why a case was flagged. Explanations, if they exist, live in technical documentation, not in the tools clinicians use every day.

- Interfaces are built for data scientists, not for clinical workflows. Dashboards show probabilities, AUC and feature importance plots. They rarely reflect real decision paths, escalation rules, or how a consultant, radiologist or ICU physician thinks about risk.

- AI Act & MDR discussions are detached from the UI. Regulatory and ethics conversations happen in separate slide decks and Word documents. There is no single, shared interface that demonstrates human oversight, traceability and limitations in a way that both clinicians and regulators can understand.

This is where explainability UX becomes a strategic capability—not just a UI refresh.

Introducing the Clinical XAI UX Design Sprint

At Lumethica, we built the Clinical XAI UX Design Sprint for one specific purpose:

Turn your existing clinical AI model into a transparent, audit-ready interface that clinicians, hospitals and regulators can trust – without changing the model itself, only the way it is explained and governed.

We focus on teams that already have a working model in areas such as:

- imaging (X-ray, CT, MRI)

- triage and prioritisation

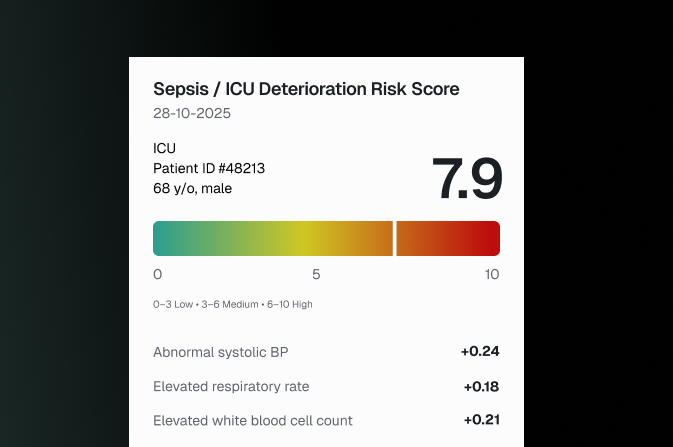

- ICU or sepsis risk scoring

- oncology decision support

Who is this for?

- Medtech and healthtech companies building AI products that will be deployed in EU healthcare systems.

- Hospitals and clinical networks piloting AI decision-support systems and preparing for AI Act and MDR conformity.

- Clinical AI product owners, Chief Medical Officers and AI leads who need a concrete, design-driven path from “good model” to “deployable, explainable system”.

What actually happens in the sprint?

Instead of generic “consulting”, the sprint is built around three tangible outcomes:

- Clinical Model Explanation Map. We work with your product, data science and clinical stakeholders to map:

- Clinical XAI Interface & Pattern Library (Figma). We design a clinical panel and, where relevant, a supervisory panel for QA or ethics teams, including:

- Oversight & Governance Brief. Finally, we provide a concise, non-marketing brief that links the interface design to:

Why this matters now

Three dynamics are converging:

- Regulation is catching up with deployment. The AI Act is now in force and explicitly treats many clinical AI systems as high-risk, with obligations that sit on top of existing MDR/IVDR rules (Public Health; Artificial Intelligence Act EU).

- Investment and adoption are accelerating. European AI-in-healthcare spending is forecast to grow almost twenty-fold over the next decade, and most EU healthcare organisations either already use or plan to use AI for diagnosis (Market Data Forecast).

- Trust will become the bottleneck. Studies repeatedly show that clinicians hesitate to rely on opaque systems, and that explainability, if poorly designed, can actually increase cognitive load instead of reducing it (arXiv; SpringerLink).

In this environment, “we have a strong model” is no longer enough. What will differentiate successful clinical AI products is the ability to show, in one interface:

- what the model is doing,

- how it supports (but does not replace) clinical judgement,

- how the organisation manages risk and fulfils AI Act obligations.

A practical next step

If you are responsible for a clinical AI initiative and recognise some of these challenges (black-box perception, stalled adoption, or growing AI Act pressure), there is a pragmatic way forward that does not start with rebuilding your model.

It starts with designing the explainability layer: the combination of UX, content and governance that lets clinicians, hospitals and regulators see how your AI works, where it fits into the workflow, and what safeguards are in place.

That is exactly what we focus on in the Clinical XAI UX Design Sprint at Lumethica.

👉 If you would like to explore whether this is a fit for your product or pilot, feel free to message us directly on LinkedIn or reach out via lumethica.ai.

We can walk through your current model, risk profile and regulatory context and see how an explainability-first approach could unlock trusted deployment under the AI Act.

Let’s Build Responsible Intelligence Together.

If your AI influences who gets credit, treatment, coverage or access – you can’t afford black-box decisions.

Lumethica helps you design AI that is transparent for your teams, defensible for your board and understandable for regulators.